Torque sensing

Twists in the tale

Ryan Maughan explains the pros and cons of the various ways to measure motor torque

As the market for electrified vehicle powertrains develops, so the quest to deliver improved efficiency and performance in electric drive systems continues. Maximising vehicle range for a given battery capacity is a key target of every powertrain development programme, while maintaining and improving the powertrain system’s safety integrity is paramount.

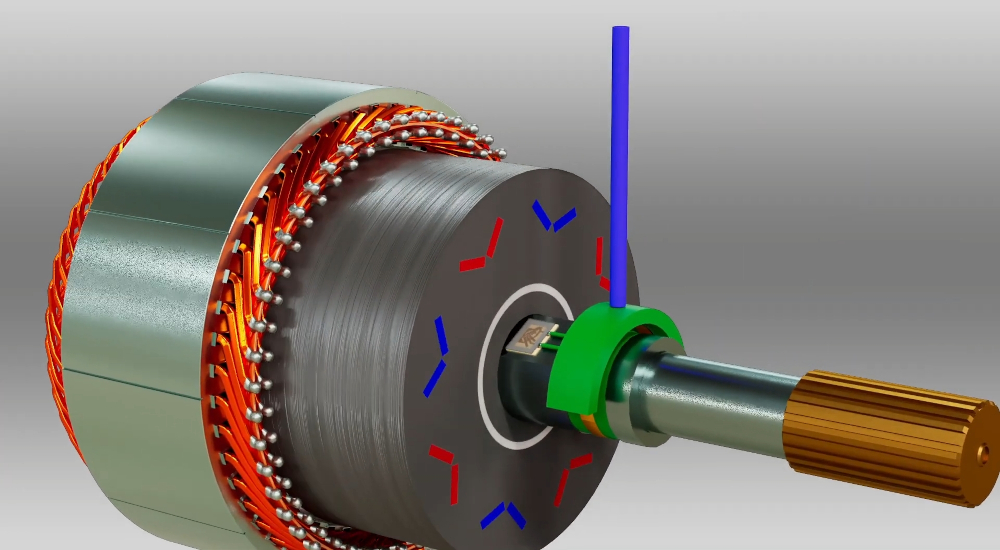

Most automotive motor inverters use a range of sensors to monitor and control the traction motor. A typical sensor scheme includes a rotor position sensor. For propulsion applications this is usually a position resolver that uses magnets mounted on the motor's shaft and Hall effect sensors to create sine and cosine voltage waveforms, which report the rotor's position relative to the stator and its rotational speed.

An alternative to magnetic sensing uses inductive sensing elements to create the same waveforms. In either case, this absolute position signal tells the motor controller where the rotor is relative to the stator phases, and how fast it is rotating.
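As a minimal sketch of how a controller might turn these waveforms into angle and speed, the Python below assumes idealised, already-demodulated sine and cosine signals with one cycle per mechanical revolution. The function names are hypothetical, and production resolver-to-digital converters typically use a tracking loop rather than a raw arctangent.

```python
import math

TWO_PI = 2.0 * math.pi


def rotor_angle(sin_v: float, cos_v: float) -> float:
    """Rotor angle in radians, in [0, 2*pi), from demodulated sine/cosine signals."""
    return math.atan2(sin_v, cos_v) % TWO_PI


def speed_rpm(theta_prev: float, theta_now: float, dt_s: float) -> float:
    """Speed from two successive angle samples taken dt_s seconds apart.

    The difference is wrapped into [-pi, pi) so that passing through the
    0 / 2*pi boundary is not mistaken for a large backwards step.
    """
    d_theta = (theta_now - theta_prev + math.pi) % TWO_PI - math.pi
    omega_rad_s = d_theta / dt_s
    return omega_rad_s * 60.0 / TWO_PI


# Example: two samples 100 microseconds apart, straddling the zero crossing
theta_a = rotor_angle(math.sin(-0.005), math.cos(-0.005))  # just below 2*pi
theta_b = rotor_angle(math.sin(0.005), math.cos(0.005))    # just above 0
print(round(speed_rpm(theta_a, theta_b, 100e-6), 1))       # 954.9
```

Wrapping the angle difference is the important detail: without it, the step across zero would read as almost a full revolution backwards.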

In addition to speed and position, another critical measurement is temperature. Typically a number of temperature sensors are embedded in the motor's stator to ensure that the stator temperature does not exceed design limits. Rotor temperature is also critical, but because it is difficult to measure temperature on a rotating part it is not measured directly; instead, its relationship with the measured stator temperature is established through simulation and experimental measurements.
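One way such a calibrated relationship might be used at run time is sketched below. It is an assumption for illustration, not a method described in the article: the rotor is modelled as lagging the stator temperature through a single thermal time constant, plus a load-dependent temperature rise that would in practice come from the simulation and test data mentioned above. All names and numbers are hypothetical.

```python
import math


def rotor_temp_step(t_rotor_prev_c: float, t_stator_c: float,
                    rise_c: float, dt_s: float, tau_s: float) -> float:
    """Advance a first-order rotor temperature estimate by one time step.

    t_stator_c : measured stator temperature
    rise_c     : calibrated rotor-over-stator temperature rise at the present
                 load (hypothetical; would come from simulation and testing)
    tau_s      : rotor thermal time constant (hypothetical calibration value)
    """
    target_c = t_stator_c + rise_c
    # Exact discretisation of dT/dt = (target - T) / tau over one step
    alpha = 1.0 - math.exp(-dt_s / tau_s)
    return t_rotor_prev_c + alpha * (target_c - t_rotor_prev_c)


# Hypothetical case: rotor starts at 20 degC, stator reads 90 degC,
# calibrated rise of 25 degC, 60 s time constant, 1 s update rate.
t_rotor = 20.0
for _ in range(600):
    t_rotor = rotor_temp_step(t_rotor, 90.0, 25.0, 1.0, 60.0)
```

After ten time constants the estimate has settled at the stator temperature plus the calibrated rise; the quality of the result depends entirely on how well that calibration matches the real machine.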

Motor phase currents are also measured, using several current sensors inside the motor controller. These are usually Hall effect devices, which measure the current from the magnetic field it creates as it flows through the conductors. For high-efficiency motor drives this method is preferred over shunt resistors, which provide a relatively simple and low-cost way of obtaining accurate current measurements but incur significant power losses in the shunt itself.
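The loss penalty follows directly from P = I²R. The figures below are illustrative assumptions rather than values from the article: a 100 µΩ shunt carrying 300 A rms.

```python
def shunt_loss_w(i_rms_a: float, r_shunt_ohm: float) -> float:
    """Conduction loss dissipated in a current-sense shunt: P = I_rms^2 * R."""
    return i_rms_a ** 2 * r_shunt_ohm


# Illustrative values only: 100 micro-ohm shunt, 300 A rms phase current
per_shunt_w = shunt_loss_w(300.0, 100e-6)   # about 9 W
three_phase_w = 3 * per_shunt_w              # about 27 W with a shunt in every phase
```

Because the loss grows with the square of current, it becomes most significant at exactly the high-load operating points where traction drives spend their peak power. A Hall effect sensor avoids it by sensing the field around the conductor rather than sitting in series with it.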

Motor torque is typically controlled by an inner current control loop. In its simplest form, this is a feedback loop in which the measured motor phase current is compared with the current demand and an error/correction value is calculated. This value is used to adjust the PWM generator, which in turn modulates the DC link voltage applied to the motor in a sinusoidal manner, driving current through the machine's stator windings.
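A minimal sketch of such a loop is shown below. It assumes a single current axis (production drives run separate d- and q-axis loops in the rotating reference frame) and replaces the real motor and PWM stage with a simple resistor-inductor model of the winding. The gains follow a common pole-zero cancellation rule of thumb (kp = L·ωc, ki = R·ωc); all names and parameter values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PICurrentController:
    kp: float                 # proportional gain, V/A
    ki: float                 # integral gain, V/(A*s)
    v_limit: float            # largest voltage the inverter can apply, V
    integral: float = 0.0     # integrator state, V

    def step(self, i_demand: float, i_measured: float, dt_s: float) -> float:
        """Return the voltage command for one control period."""
        error = i_demand - i_measured
        candidate = self.integral + self.ki * error * dt_s
        v = self.kp * error + candidate
        # Simple anti-windup: only accept the integrator update when the
        # output is not saturated, then clamp to what the inverter can apply.
        if abs(v) <= self.v_limit:
            self.integral = candidate
        return max(-self.v_limit, min(self.v_limit, v))


def simulate(i_demand=100.0, r=0.05, l=0.2e-3, wc=2000.0, dt_s=1e-5, steps=1000):
    """Run the loop against an R-L winding model and return the final current."""
    ctrl = PICurrentController(kp=l * wc, ki=r * wc, v_limit=400.0)
    i = 0.0
    for _ in range(steps):
        v = ctrl.step(i_demand, i, dt_s)
        i += dt_s * (v - r * i) / l   # forward-Euler update of L di/dt = v - R i
    return i
```

With these gains the closed loop behaves approximately like a first-order lag with a time constant of 1/ωc (0.5 ms here), and the integrator removes any steady-state error.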

This current is proportional to the magnetic flux it generates in the stator windings, which in turn is proportional to the torque produced. The controller will therefore source as much current as is needed to reach the torque requested of the drive (assuming the controller is tuned correctly).

Sensorless control differs from the classical sensored control schemes described above in that no position sensor is used to provide rotor position feedback; instead, the position is calculated by analysing the back-EMF measured on the motor phases. Sensorless control is deployed primarily to reduce cost at the expense of accuracy, but the reduced control fidelity, particularly at low rotational speeds, and its consequences for safety integrity mean it is not feasible for traction motor applications.
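The principle can be sketched for a surface-mounted permanent magnet machine in the stationary alpha-beta frame, where the back-EMF vector leads the magnet flux by 90 electrical degrees and has magnitude ω·ψf. This is a deliberately bare sketch with hypothetical names: practical sensorless schemes add observers or phase-locked loops and filtering. The example also shows why low speed is the weak point: the back-EMF being measured shrinks in proportion to speed.

```python
import math


def back_emf_angle(v_ab, i_ab, di_ab_dt, r_ohm, l_h):
    """Estimate electrical rotor angle and back-EMF magnitude (surface PMSM).

    v_ab, i_ab, di_ab_dt are (alpha, beta) tuples of phase voltage, current and
    current derivative. Back-EMF is recovered from the stator voltage equation
    e = v - R*i - L*di/dt, and for positive rotation
    e_alpha = -w*psi*sin(theta), e_beta = w*psi*cos(theta).
    """
    e_a = v_ab[0] - r_ohm * i_ab[0] - l_h * di_ab_dt[0]
    e_b = v_ab[1] - r_ohm * i_ab[1] - l_h * di_ab_dt[1]
    theta = math.atan2(-e_a, e_b) % (2.0 * math.pi)
    return theta, math.hypot(e_a, e_b)


# Synthetic check: rotor at 1.0 rad electrical, 500 rad/s, 0.05 Wb flux linkage,
# no current flowing, so the terminal voltage equals the back-EMF.
w, psi, theta_true = 500.0, 0.05, 1.0
v = (-w * psi * math.sin(theta_true), w * psi * math.cos(theta_true))
theta_est, e_mag = back_emf_angle(v, (0.0, 0.0), (0.0, 0.0), 0.05, 0.2e-3)
```

At 500 rad/s the back-EMF here is 25 V; at 5 rad/s it would be only 0.25 V, a signal easily swamped by voltage measurement and model errors, which is why the angle estimate degrades at low speed.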

Speed/position encoders and resolvers vary in cost depending on specification, accuracy, resolution and environmental performance. Depending on the requirement, they can account for a particularly significant share of the machine's overall bill of materials. Because the position sensor is a key part of the functional safety control system in traction applications, accuracy and reliability are critical, and more costly resolver technology is required than in less safety-critical applications.

In order to estimate the torque in an electrical machine, the torque constant (Kt) must first be obtained by a combination of model-based simulation and physical measurements, the latter being time-consuming. We can say that the torque of an electrical machine is proportional to magnetic flux, which in turn is proportional to current.
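For a permanent magnet synchronous machine, this proportionality is usually written in the rotor (dq) reference frame. Assuming the amplitude-invariant Park transform, p pole pairs, magnet flux linkage ψf and d- and q-axis inductances Ld and Lq, the standard torque equation and the resulting torque constant are:

$$T_e = \frac{3}{2}\,p\left[\psi_f\, i_q + \left(L_d - L_q\right) i_d\, i_q\right]$$

$$T_e = \frac{3}{2}\,p\,\psi_f\, i_q = K_t\, i_q, \qquad K_t = \frac{3}{2}\,p\,\psi_f \qquad \left(L_d = L_q \text{ or } i_d = 0\right)$$

The reluctance term, together with the dependence of ψf on magnet temperature and saturation, is what makes Kt a moving target in practice.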

If the current flowing into the motor from the inverter can be accurately measured, the torque of the machine can be calculated. In practice, however, many factors make this method of torque estimation less than ideal where accurate torque values are required.

The main variables that affect the torque constant of an electrical machine fall into two distinct categories. The first is design factors, such as saturation, magnetic loading and temperature, which affect parameters such as the magnetic flux density (Br) in the air gap. The motor torque constant (Kt) and voltage constant (Ke) are both linked to Br in the air gap between the rotor and the stator, and Br changes as magnet temperature rises, depending on the magnet properties, rotor material permeability and machine loading factors. Kt is therefore a continually varying property.
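To give a sense of scale, the sketch below assumes Kt scales linearly with magnet remanence and uses a reversible temperature coefficient of −0.12 %/°C, a typical order of magnitude for sintered NdFeB magnets. Both the linear model and the coefficient are assumptions, and saturation and reluctance effects are ignored.

```python
def kt_at_temperature(kt_ref: float, t_magnet_c: float,
                      t_ref_c: float = 20.0,
                      alpha_per_c: float = -0.0012) -> float:
    """Torque constant corrected for magnet temperature.

    Assumes Kt is proportional to magnet remanence Br, and that
    Br(T) = Br(T_ref) * (1 + alpha * (T - T_ref)) with alpha in 1/degC.
    """
    return kt_ref * (1.0 + alpha_per_c * (t_magnet_c - t_ref_c))


kt_cold = 1.0                                   # Nm/A at 20 degC (hypothetical)
kt_hot = kt_at_temperature(kt_cold, 120.0)      # 0.88 Nm/A at 120 degC
shortfall = 1.0 - kt_hot / kt_cold              # 0.12, i.e. 12 % less torque per amp
```

Under these assumptions, at 120 °C the machine would deliver 12 % less torque than an estimator still using the 20 °C value of Kt would report.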

The challenge is compounded by the additional magnetic fields induced by reluctance effects in interior permanent magnet (IPM) geometries, and it remains in other machine technologies such as switched reluctance and induction motors.

The second category is manufacturing variability, where each machine type has its own set of variances, such as in air gap, magnet Br and so on. To some degree, the design variance of the machine and its effect on Kt can be simulated or measured, and the values of Kt can be stored in a parametrised look-up table to increase the accuracy of the estimated torque.
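A parametrised look-up of this kind is commonly implemented as bilinear interpolation over a grid. The sketch below interpolates Kt over phase current and magnet temperature. The table values are invented for illustration (including a drop at high current to mimic saturation), and real calibrations would cover more axes on finer grids.

```python
from bisect import bisect_right


def _bracket(axis, x):
    """Return (i, x_clamped) with axis[i] <= x_clamped <= axis[i + 1]."""
    x = min(max(x, axis[0]), axis[-1])
    i = min(bisect_right(axis, x) - 1, len(axis) - 2)
    return i, x


def kt_lookup(currents, temps, table, i_a, t_c):
    """Bilinear interpolation of Kt over (phase current, magnet temperature).

    Inputs outside the table are clamped to its edges rather than extrapolated.
    """
    i, x = _bracket(currents, i_a)
    j, y = _bracket(temps, t_c)
    fx = (x - currents[i]) / (currents[i + 1] - currents[i])
    fy = (y - temps[j]) / (temps[j + 1] - temps[j])
    return (table[i][j] * (1 - fx) * (1 - fy)
            + table[i + 1][j] * fx * (1 - fy)
            + table[i][j + 1] * (1 - fx) * fy
            + table[i + 1][j + 1] * fx * fy)


# Hypothetical characterisation data: rows are current (A), columns magnet temperature (degC)
CURRENTS = [0.0, 200.0, 400.0]
TEMPS = [20.0, 80.0, 140.0]
KT_TABLE = [
    [0.80, 0.76, 0.72],
    [0.80, 0.76, 0.72],
    [0.70, 0.67, 0.63],   # Kt falls at high current as the iron saturates
]

i_phase = 300.0
torque_estimate = kt_lookup(CURRENTS, TEMPS, KT_TABLE, i_phase, 50.0) * i_phase
```

Clamping at the table edges is a conservative design choice; extrapolating a saturating characteristic beyond the measured range can produce badly wrong torque estimates.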

However, the sheer number of permutations needed to give a torque accuracy comparable to a physical torque value obtained from a rotary torque transducer is incredibly processor-intensive, and is unfeasible for all but the most basic design variables. It should also be noted that, although there are many techniques to parametrise these variances for a specific machine and drive combination, doing so for general-purpose industrial inverters, which must deliver performance with a huge choice of motor sizes and technologies from multiple vendors, is practically impossible.
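The scaling problem is easy to quantify. Assuming, purely for illustration, 20 calibration points per variable and 4-byte values, each additional variable multiplies the size of a full-factorial table by 20:

```python
def table_entries(points_per_axis: int, n_axes: int) -> int:
    """Number of grid points in a full-factorial look-up table."""
    return points_per_axis ** n_axes


def table_megabytes(points_per_axis: int, n_axes: int, bytes_per_entry: int = 4) -> float:
    """Storage needed for the table, in megabytes (10^6 bytes)."""
    return table_entries(points_per_axis, n_axes) * bytes_per_entry / 1e6


# Hypothetical axes: current and temperature, then adding air gap, magnet Br,
# speed and DC link voltage.
for n_axes in (2, 4, 6):
    print(n_axes, table_entries(20, n_axes), table_megabytes(20, n_axes))
```

Two variables need 400 entries; six need 64 million (256 MB), and each of those grid points would also have to be characterised by simulation or measurement.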

In traction applications in particular, from a system safety integrity point of view the primary safety goal is to ensure that there are no unrequested torque disturbances or deliveries that could lead to unintended vehicle acceleration or, in the case of an EV with regenerative braking capability, unintended deceleration. Achieving this safety goal solely through torque estimation derived from Hall effect current measurement is not possible, as it leaves failure modes uncovered.

The physical measurement of motor phase currents using Hall effect sensors is prone to issues such as background noise from electromagnetic fields inside the inverter and transient signal effects on the current-carrying cables. These must be filtered out before the measured values are read, which reduces the fidelity of the measurement. There is a fine balance between filtering the signals to remove noise and retaining a signal of sufficient integrity to detect faults in the system accurately.
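The filtering trade-off can be illustrated with the simplest case, a first-order low-pass filter. Its cutoff frequency fc sets both how much high-frequency noise is removed and how far the output lags the input (roughly τ = 1/(2π·fc) at low frequencies). The sketch uses hypothetical names and a 1 MHz sample rate chosen only for illustration.

```python
import math


def first_order_lpf(samples, dt_s, cutoff_hz):
    """Discrete first-order low-pass filter (exponential smoothing)."""
    tau_s = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = dt_s / (tau_s + dt_s)
    y = samples[0]
    out = []
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out


def lag_s(cutoff_hz):
    """Approximate low-frequency delay of a first-order low-pass filter."""
    return 1.0 / (2.0 * math.pi * cutoff_hz)


# Lowering the cutoff removes more noise but increases the delay:
for fc in (10_000.0, 1_000.0):
    print(f"fc = {fc:>7.0f} Hz -> lag = {lag_s(fc) * 1e6:.1f} us")

# Step response at 1 MHz sampling with a 1 kHz cutoff
step_out = first_order_lpf([0.0] + [1.0] * 200, 1e-6, 1000.0)
```

Dropping the cutoff from 10 kHz to 1 kHz increases the delay from about 16 µs to about 159 µs; after one time constant the filtered step has only reached roughly 63 % of its final value.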

This issue has become more acute as both drive and machine efficiencies have improved, making it harder to distinguish a potential short-circuit condition from normal operation of the system. There is also a major issue with signal latency, or time delay, in measuring the current, which can lead to inaccuracies in the calculated torque and degrade the dynamic performance of the machine.
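A rough feel for the cost of latency: if a current sample is already delay_s old when it is transformed into the rotor frame, the rotor has moved on by ω_e·delay electrical radians. The speed, pole-pair count and 50 µs delay below are illustrative assumptions, and the cos(error) torque factor assumes a surface permanent magnet machine whose current vector would otherwise be correctly aligned with the q-axis.

```python
import math


def delay_angle_error_deg(speed_rpm: float, pole_pairs: int, delay_s: float) -> float:
    """Electrical angle the rotor turns through during a measurement delay."""
    f_elec_hz = speed_rpm / 60.0 * pole_pairs
    return 360.0 * f_elec_hz * delay_s


err_deg = delay_angle_error_deg(10_000, 4, 50e-6)       # 12 electrical degrees
torque_factor = math.cos(math.radians(err_deg))         # about 0.978
```

Twelve electrical degrees of misalignment costs about 2 % of torque at that operating point, and the error grows linearly with both speed and delay.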

Why is accurate torque measurement needed?

Despite the present state of the art being to use advanced torque estimation techniques, there are many reasons why accurate measurement of the torque of a rotating load driven by an e-machine is desirable. By measuring rather than estimating the motor torque, it is possible to calculate load power, and thus efficiency, independently, where the power of the machine is equal to the measured torque multiplied by the rotational shaft speed, according to the formula:

$$P = T\,\omega$$

where P is the shaft power in W, T is the measured torque in Nm and ω is the shaft speed in rad/s.
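As a worked example of shaft power as torque times angular speed, with ω = 2πn/60 for a speed n in rpm, the operating point below is hypothetical. If the electrical input is measured at the inverter's DC link, as assumed here, the result is the combined inverter and motor efficiency.

```python
import math


def shaft_power_w(torque_nm: float, speed_rpm: float) -> float:
    """Mechanical shaft power P = T * omega, converting rpm to rad/s."""
    return torque_nm * speed_rpm * 2.0 * math.pi / 60.0


def drive_efficiency(torque_nm: float, speed_rpm: float,
                     v_dc: float, i_dc: float) -> float:
    """Motoring efficiency: measured mechanical output over DC electrical input."""
    return shaft_power_w(torque_nm, speed_rpm) / (v_dc * i_dc)


# Hypothetical operating point: 200 Nm at 3,000 rpm, 400 V and 170 A at the DC link
p_mech = shaft_power_w(200.0, 3000.0)                 # about 62.8 kW
eta = drive_efficiency(200.0, 3000.0, 400.0, 170.0)   # about 0.924
```

Because the torque is measured rather than derived from current, any error in the inverter's own torque estimate no longer feeds into the efficiency figure.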

A good example of this is a motor test dynamometer, where torque accuracy is paramount when characterising the load, which could be another e-machine or any rotating load such as a pump or fan. To isolate the characteristics of the load, they need to be decoupled from the inaccuracies of the e-machine's estimated torque.

To do that, a rotary torque transducer is typically used. The most common is an inline rotary torque transducer located between the e-machine and the load and connected to both via flexible shaft couplings, which are needed to mount the transducer adequately and prevent it from being subjected to off-axis bending loads.

There are, however, several disadvantages with this type of rotary torque transducer, such as cost, shaft alignment, additional mechanical shaft harmonics and balancing constraints, as well as susceptibility to background electromagnetic noise.

The cost of these transducers can vary considerably depending on the specific application. For example, a typical 500 Nm transducer rated for 10,000 rpm costs several thousand pounds, and there are additional costs associated with this type of configuration, such as the flexible shaft couplings.

Different types of sensor technology are used in these torque transducers. One type uses strain gauges with short-range telemetry and inductive power transfer, with low-cost gauges bonded onto the shaft inside the torque transducer.

The gauges are read by electronics on the rotating shaft, which transmit the measured signals via the telemetry link. In the past, slip rings and brushes were used to get power and signals on and off the shaft, but these have largely been superseded by telemetry and inductive power transfer.
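For a solid round shaft in pure torsion, the strain seen by a gauge aligned at 45° to the shaft axis relates to torque through standard elasticity results: surface shear stress τ = 16T/(πd³), shear strain γ = τ/G, and principal strain γ/2 at 45°. The sketch below assumes a steel shaft (G ≈ 80 GPa) of 30 mm diameter, both illustrative choices, and shows why such sensors deal in microstrain.

```python
import math


def torque_from_strain(strain_45: float, d_m: float, g_pa: float) -> float:
    """Torque on a solid round shaft from the normal strain measured at 45 degrees.

    tau = 16*T/(pi*d^3), gamma = tau/G, strain_45 = gamma/2,
    so T = strain_45 * pi * d^3 * G / 8.
    """
    return strain_45 * math.pi * d_m ** 3 * g_pa / 8.0


def strain_from_torque(torque_nm: float, d_m: float, g_pa: float) -> float:
    """Inverse of torque_from_strain: expected 45-degree strain for a given torque."""
    return 8.0 * torque_nm / (math.pi * d_m ** 3 * g_pa)


# Illustrative shaft: 30 mm solid steel, 500 Nm
eps = strain_from_torque(500.0, 0.03, 80e9)   # roughly 590 microstrain
```

In practice gauges are usually mounted in pairs at +45° and −45° in a bridge, which doubles the signal and cancels bending and uniform thermal strain.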

Another type uses displacement sensors with optical or inductive sensing elements to measure the relative position of two rotating discs attached to the shaft inside the transducer. By accurately measuring the positions of the two discs, the twist angle of the shaft can be determined and used to calculate the torque acting on the shaft.
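The twist-angle approach follows from the torsion formula T = GJθ/L, with J = πd⁴/32 for a solid shaft. The dimensions below are hypothetical; they show that even a fairly slender 30 mm steel section only 100 mm long twists by less than half a degree at 500 Nm, so the displacement measurement must be very precise or the shaft deliberately made more compliant.

```python
import math


def polar_moment_solid_m4(d_m: float) -> float:
    """Polar second moment of area of a solid round shaft."""
    return math.pi * d_m ** 4 / 32.0


def torque_from_twist(twist_rad: float, length_m: float, d_m: float, g_pa: float) -> float:
    """Torque from the measured twist between two points length_m apart."""
    return g_pa * polar_moment_solid_m4(d_m) * twist_rad / length_m


def twist_from_torque(torque_nm: float, length_m: float, d_m: float, g_pa: float) -> float:
    """Twist in radians produced by a given torque over length_m."""
    return torque_nm * length_m / (g_pa * polar_moment_solid_m4(d_m))


twist_deg = math.degrees(twist_from_torque(500.0, 0.1, 0.03, 80e9))   # about 0.45 deg
```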

Both approaches require that an element of twist or flex be built into the transducer shaft, to create either a strain large enough to be measured or an angular twist that the displacement sensors can measure. In both cases, the shaft torsion can lead to dynamic stability issues in the system during more demanding test cycles.
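Why compliance matters dynamically can be seen from the simplest model: the motor and load as two inertias joined by the transducer shaft, which acts as a torsional spring of stiffness k. The natural frequency scales with √k, so softening the shaft to obtain a measurable twist pulls the resonance down towards the frequencies the drive excites. The inertias and stiffnesses below are hypothetical.

```python
import math


def torsional_natural_freq_hz(k_nm_per_rad: float, j1_kgm2: float, j2_kgm2: float) -> float:
    """Natural frequency of two inertias coupled by a torsional spring."""
    return math.sqrt(k_nm_per_rad * (j1_kgm2 + j2_kgm2) / (j1_kgm2 * j2_kgm2)) / (2.0 * math.pi)


# Hypothetical rig: 0.05 kg m^2 motor rotor, 0.1 kg m^2 load
f_stiff = torsional_natural_freq_hz(60_000.0, 0.05, 0.1)   # about 213 Hz
f_soft = torsional_natural_freq_hz(15_000.0, 0.05, 0.1)    # a quarter of the stiffness
ratio = f_stiff / f_soft                                   # 2.0
```

Cutting the shaft stiffness to a quarter halves the torsional resonance frequency.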

Displacement sensors have found applications in production torque sensing systems, for example in automotive electric power-assisted steering (EPAS). A small amount of twist in the steering shaft is required to generate a displacement that the torque sensor can measure, but this reduces steering feel, or introduces vagueness, a common criticism of vehicles fitted with EPAS. This has meant that, for traction motor applications, torque sensing has been limited to the laboratory or test track environment.

Integrating a high-speed dynamic torque sensor into the electric motor is desirable, but has not been possible owing to the inherent limitations of the torque sensing technology currently on the market, so workaround methods have been developed.

A potential new solution

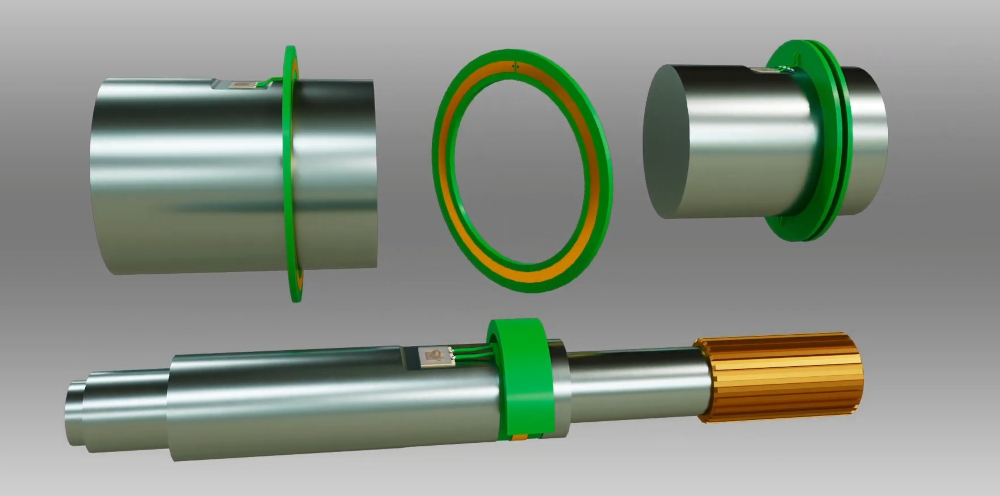

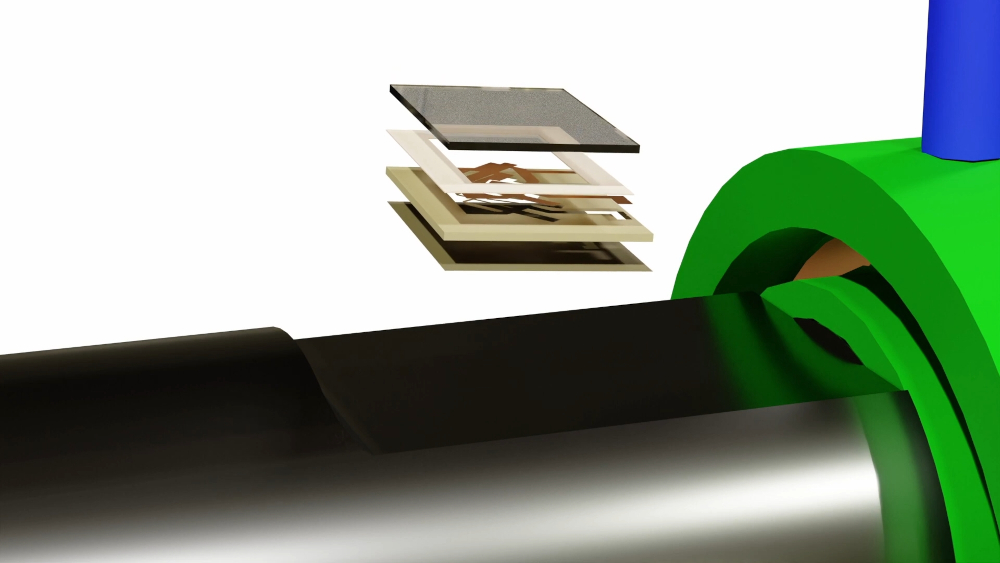

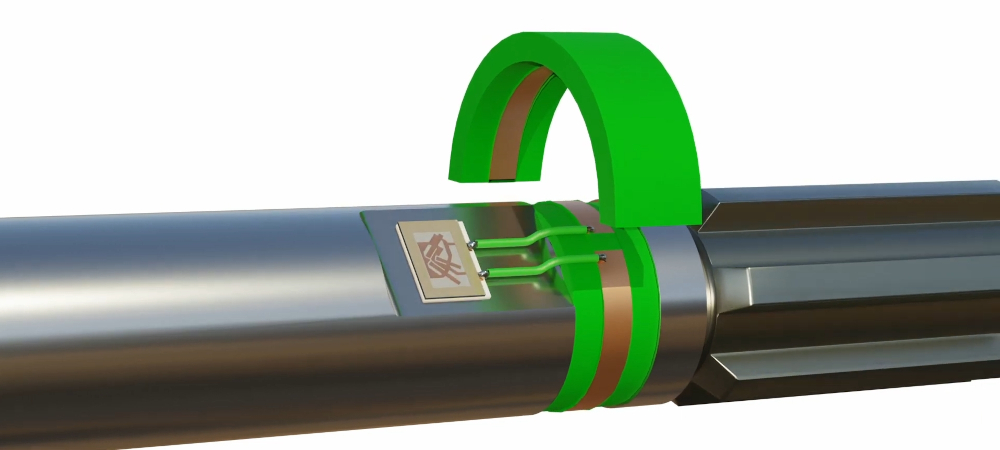

Surface Acoustic Wave (SAW) sensor technology provides a wireless, passive, non-contact sensing system consisting of two main components. The first is a set of SAW sensing elements connected to a close-coupled antenna (also called an RF coupler) mounted on the machine shaft. The second is an electronic interrogation unit called a reader, which is connected to a stationary antenna or RF coupler mounted off the shaft in close proximity to the rotating part.

The reader sends an ultra-low power RF interrogation signal that is transmitted to the SAW element through the antenna or RF coupler. The sensing element does not require any other power source or electronics on the shaft; it works as a passive back-scatterer that reflects the interrogation signal back to the reader.

Strain and temperature alter the back-scattered signal by known amounts. The reader analyses the received signal and calculates the measured values. The antennas can also be configured to act as an inductive speed/position encoder, and the reader electronics can typically be integrated into the motor controller circuitry.
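The SAW devices themselves are proprietary, so the sketch below is only a simplified, assumed model of how strain and temperature can be separated. Two resonators mounted at ±45° to the shaft axis see equal and opposite torsional strain but the same temperature, and each resonant frequency is taken to shift linearly with both. Practical designs may use additional resonators and non-linear calibration; all names and coefficients here are hypothetical.

```python
def saw_pair_frequencies(strain, delta_t_c, f0_hz, s_strain, s_temp):
    """Forward model: resonator A sees +strain, resonator B sees -strain."""
    common = 1.0 + s_temp * delta_t_c
    return f0_hz * (common + s_strain * strain), f0_hz * (common - s_strain * strain)


def decode_saw_pair(f_a_hz, f_b_hz, f0_hz, s_strain, s_temp):
    """Recover (strain, temperature change) from the two resonant frequencies.

    The difference cancels the shared temperature term; the sum cancels strain.
    """
    frac_a = f_a_hz / f0_hz - 1.0
    frac_b = f_b_hz / f0_hz - 1.0
    strain = (frac_a - frac_b) / (2.0 * s_strain)
    delta_t_c = (frac_a + frac_b) / (2.0 * s_temp)
    return strain, delta_t_c


# Hypothetical calibration: 200 MHz nominal, 1 ppm shift per microstrain,
# -30 ppm per degC shared by both resonators.
F0, S_E, S_T = 200e6, 1.0, -30e-6
fa, fb = saw_pair_frequencies(500e-6, 40.0, F0, S_E, S_T)
strain, d_t = decode_saw_pair(fa, fb, F0, S_E, S_T)
```

The differential arrangement is what lets a single measurement report both torque (via strain and the shaft geometry) and shaft temperature.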

The advantages of this approach are that, because it measures micro-strain, no torsion bar is required, so a stiff system can be maintained. A torque measurement accuracy of ±1% can also be achieved, meaning the torque signal can be brought into the motor's control loop.

The installation is also completely wireless, so only small, light and passive components are required on the shaft. The system is immune to background interference and magnetic fields, torque and temperature can be measured at more than 7 kHz, and its compact dimensions mean it can easily be packaged into a complex system.

It does have disadvantages, though. It is not available as an off-the-shelf sensor; instead, it is a technology that is applied to a shaft, so it has to be designed into a system, and it requires proprietary Transense SAW devices and an ASIC developed by Transense to interrogate them.

Embedding SAW sensors into an electric motor provides a new possibility for robust, high-speed torque and shaft temperature measurement that is suitable for use in production motor drive systems. These dynamic measurements can be used as a new control parameter to optimise the motor control to improve efficiency and safety integrity, particularly in a highly transient system such as an electric traction drive system.

The approach also reduces the need for design safety factors that account for manufacturing tolerances in production parts and measurement uncertainty in rotor temperature and other key variables, and it reduces the dependence on look-up tables for machine control. That would make it possible to tune the motor control on a ‘live’ basis for any operating condition and mode of the drive system.

Dynamic torque feedback would also provide a dissimilar, comparative reference measurement to the motor phase currents, enabling new safety integrity functionality to be achieved. Although it is not a classical, dual modular redundant circuit – as in two identical sensing circuits – it provides an accurate and dissimilar comparison between the expected current at specific load points and the torque produced, which can deliver a higher safety integrity level.

If the measured current and actual torque produced differ, a fault would be raised and action taken. In effect, that would eliminate many of the issues around managing false-positive error reporting from current signals and signal processing in the control system.
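A minimal sketch of such a cross-check is shown below. It assumes a single torque constant at the operating point (a real implementation would take the expected value from the machine's characterisation) and uses a simple consecutive-sample debounce so that isolated noisy samples do not raise a fault. The class and its thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TorquePlausibilityMonitor:
    kt_nm_per_a: float        # expected torque per amp at this operating point
    tolerance_nm: float       # allowed |measured - expected| before a mismatch is counted
    debounce_samples: int     # consecutive mismatches needed to raise a fault
    _count: int = 0

    def update(self, i_q_a: float, torque_measured_nm: float) -> bool:
        """Return True when a torque/current mismatch fault should be raised."""
        expected_nm = self.kt_nm_per_a * i_q_a
        if abs(torque_measured_nm - expected_nm) > self.tolerance_nm:
            self._count += 1
        else:
            self._count = 0   # any agreeing sample resets the debounce counter
        return self._count >= self.debounce_samples


monitor = TorquePlausibilityMonitor(kt_nm_per_a=0.8, tolerance_nm=10.0, debounce_samples=3)
results = [monitor.update(100.0, t) for t in (80.0, 150.0, 150.0, 150.0)]
```

Here the first sample agrees with the expected 80 Nm, and the fault is only raised on the third consecutive 150 Nm reading.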

This proposed topology adds a degree of fault tolerance, together with the benefits of accurate torque feedback, such as independent reporting of motor load performance, as described above.

Another advantageous technique that can be used is real-time harmonic analysis of the load. Depending on the bandwidth of the current transducer used, it is possible to detect motor and load harmonics, which could be used in predictive failure analysis.
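Where only a handful of known harmonic orders are of interest, the Goertzel algorithm is a cheap alternative to a full FFT, computing a single DFT bin per frequency. The sketch below assumes a 7 kHz sample rate, matching the measurement rate quoted earlier for the SAW system, which limits observable components to below 3.5 kHz. The synthetic torque signal and the names are hypothetical.

```python
import math


def goertzel_amplitude(samples, sample_rate_hz, target_hz):
    """Amplitude of the DFT bin nearest target_hz, via the Goertzel recurrence."""
    n = len(samples)
    k = round(n * target_hz / sample_rate_hz)      # nearest DFT bin index
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    power = s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2   # |X_k|^2
    return 2.0 * math.sqrt(max(power, 0.0)) / n


# Synthetic 0.1 s torque record: 200 Nm mean, 5 Nm ripple at 300 Hz, 2 Nm at 1,200 Hz
FS = 7000.0
torque = [200.0 + 5.0 * math.sin(2 * math.pi * 300 * i / FS)
          + 2.0 * math.cos(2 * math.pi * 1200 * i / FS) for i in range(700)]
for f in (300.0, 500.0, 1200.0):
    print(f, round(goertzel_amplitude(torque, FS, f), 3))
```

Trending these amplitudes over time, rather than inspecting single records, is what would make them useful for predictive failure analysis.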

In addition to measuring shaft torque and temperature, it is possible to install additional SAW devices to measure temperature directly on the motor’s rotor. Placing a SAW device close to a magnet provides high-quality verification and continuous monitoring of rotor magnet temperature, which is very difficult to achieve even in a test lab environment with conventional measuring techniques.

SAW sensor technology has proven itself to be robust enough to be integrated into aerospace engines, as well as motorsport transmission systems with exceptional reliability. The relatively simple nature of the sensing element, combined with highly capable manufacturing process technology, means there are very few potential failure modes of the component, and those that do exist can be more easily controlled.

Summary

There are benefits to using low-cost shaft-mounted torque and temperature transducers rather than conventional torque and rotor temperature estimation techniques. The main advantages of direct torque measurement are intrinsically high-accuracy motor torque reporting, a redundant and dissimilar verification, and accurate characterisation of true load profiles where conventional inverter estimation inaccuracies would otherwise distort the true torque value.

By using a low-cost shaft-type torque sensor, it is envisaged that far less upfront machine characterisation will be needed during the initial set-up stage, giving major advantages in the form of more accurate drive motor tuning and a faster time to market.
