Artificial Intelligence

(Image Courtesy of Diabatix)

Network news

Nick Flaherty looks at the latest advances in – and uses for – AI in the electric vehicle market.

There are many uses for machine learning and predictive algorithms, often grouped together under the term artificial intelligence (AI), in the development and operation of electric vehicles. During development, AI techniques can explore the design space much more quickly than an engineer can. Run on cloud computing resources, they can produce more efficient designs for circuit boards or thermal subsystems.

Predictive Kalman algorithms can run in the central controller of a system to monitor the performance of its parts. This makes it possible to predict when a part might fail, allowing preventive maintenance.

More e-mobility companies are using cloud-based AI for system development, particularly for vision processing. LG, for example, is using the Microsoft Azure platform to develop advanced driver assistance systems (ADAS), a driver status monitoring camera and a multi-purpose front camera.

Machine learning using neural networks is currently a popular AI technique. The networks consist of layers of interconnected, weighted nodes, or neurons, and are trained using data. The convolutional neural network (CNN), widely used for image recognition, is one common type.

For image recognition systems, the training data takes the form of images, whether of people, cars, trucks or road signs. The network ‘learns’ by adjusting the influence each neuron has on the final result, known as its weight. In other cases, the data could be streams of voltage or current measurements, with the weights becoming correlated with particular patterns in the data.

Training is often performed in the cloud on Intel processors with graphics processing unit (GPU) accelerators from Nvidia to create a model. Generally, the more data used for training, the more accurate the model, although its quality is also determined by the quality of the training data.

The model then runs on an ‘inference engine’ on a local microprocessor or GPU in the vehicle, but at this stage it is fixed and no longer ‘learns’. Training can continue in the cloud, even using data fed back from an e-mobility platform. That updates the model, which then has to be downloaded to the local inference engine.
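To make the split between training and inference concrete, here is a minimal Python sketch. It is a deliberately simple toy, not how any production framework works: a single logistic neuron (far simpler than a CNN) is trained by gradient descent on labelled data, standing in for cloud training, and the resulting weights are frozen into a separate inference function standing in for the in-vehicle engine. The names `train` and `make_inference_engine` are hypothetical.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Model = Tuple[Tuple[float, ...], float]  # (weights, bias)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train(samples: List[Tuple[Sequence[float], int]], epochs: int = 200,
          lr: float = 0.5, seed: int = 0) -> Model:
    """'Cloud' side: learn weights from labelled data by stochastic gradient descent."""
    rng = random.Random(seed)
    n_inputs = len(samples[0][0])
    w = [rng.uniform(-0.1, 0.1) for _ in range(n_inputs)]
    b = 0.0
    for _ in range(epochs):
        for x, y in samples:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # gradient of the cross-entropy loss w.r.t. the neuron's input sum
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return tuple(w), b  # tuples: the exported model is immutable


def make_inference_engine(model: Model) -> Callable[[Sequence[float]], float]:
    """'Vehicle' side: the weights are fixed, so nothing is learned here."""
    w, b = model

    def infer(x: Sequence[float]) -> float:
        return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

    return infer


# Toy labelled data: output 1 if either input is active (logical OR).
data = [((0.0, 0.0), 0), ((0.0, 1.0), 1), ((1.0, 0.0), 1), ((1.0, 1.0), 1)]
engine = make_inference_engine(train(data))
```

Continuing to learn in the cloud would mean calling `train` again on the enlarged dataset and rebuilding the engine from the new model, the software equivalent of downloading an update.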

Common neural network frameworks include Caffe, TensorFlow, Theano, Torch and Microsoft’s Cognitive Toolkit. They typically run on the cloud services of Google, Microsoft, Amazon Web Services and IBM Watson, although they can also be implemented easily in private data centres or on individual servers. Having more processors available speeds up training, which typically involves hundreds of thousands of images or data streams.

Silicon chips have been developed to accelerate these frameworks in both the learning and inference stages. Google has developed dedicated TensorFlow accelerators, while Graphcore is working on a range of devices that can be used to accelerate the network learning in the data centre and also run the inference engine in the end application such as an electric vehicle.

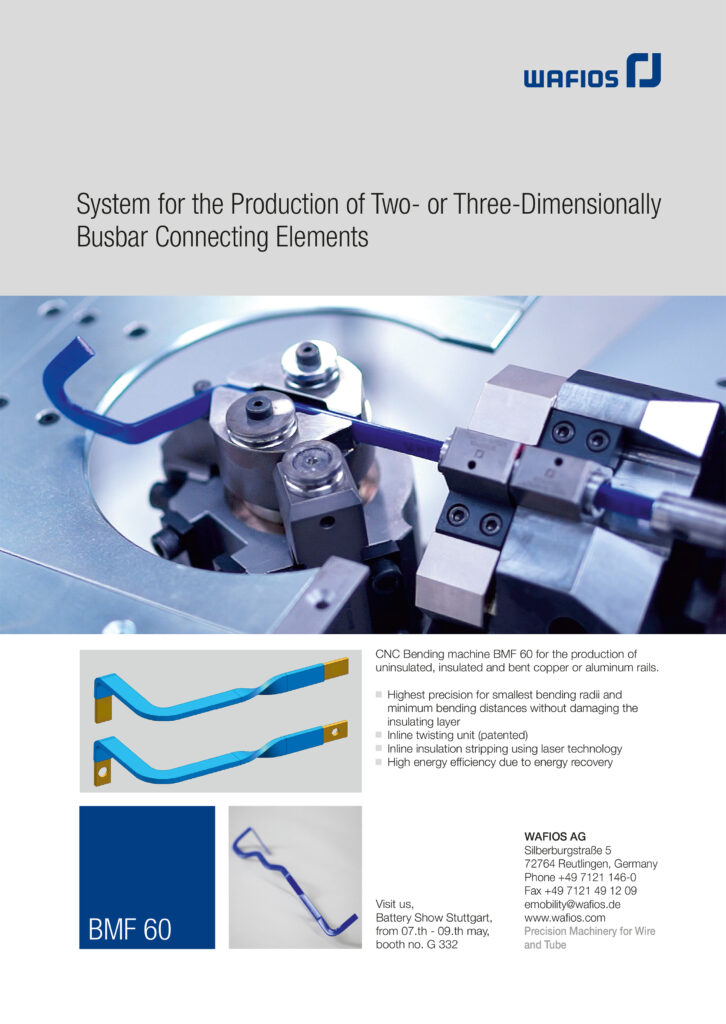

Intel is also developing a different type of processor that is more suited to these algorithms. The ‘neuromorphic’ architecture is meant to mimic the way the human brain works, with a large array of neurons connected in multiple dimensions.

In 2018, Intel demonstrated a test chip called Loihi that has 130,000 neurons and 130 million connections, or synapses. The team behind it showed that this could learn a million times faster than traditional neural network frameworks, and with a thousandth of the power consumption.

In most processors, all the nodes are linked by a clock for synchronous operation. Loihi instead uses a fully asynchronous (unclocked) mesh of cores, where each core includes a learning engine that can be programmed to adapt the network parameters during operation, supporting different ways of learning.

The team is also developing algorithms that can run on this new type of chip, including dynamic pattern learning where the system can continue learning from the data that comes in, constantly improving the performance of the AI system.

Another large chip maker, Qualcomm, developed a neuromorphic chip back in 2013 but hasn’t taken the technology into mass production.

Another type of AI algorithm is more predictive and can run in real time on an e-mobility platform. The Kalman algorithm (often called the Kalman filter, and also known as linear quadratic estimation) takes a series of measurements over time, filters out the noise and estimates the uncertainty in the result.
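A minimal sketch of the idea in Python, assuming the simplest possible case: a single quantity (here a cell voltage) that stays roughly constant between samples, a sensor with known noise variance, and hand-picked noise parameters. The class name `ScalarKalman` and all parameter values are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """One-dimensional Kalman filter for a slowly varying quantity."""
    x: float  # current estimate of the true value
    p: float  # variance of that estimate (the filter's uncertainty)
    q: float  # process noise variance: how far the true value may wander per step
    r: float  # measurement noise variance of the sensor

    def update(self, z: float) -> float:
        # Predict: the model says the value is unchanged, but uncertainty grows by q.
        self.p += self.q
        # Update: the Kalman gain weighs the new measurement against the prediction.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k
        return self.x


# Noisy readings alternating +/-0.1 V around a true 3.7 V.
readings = [3.8 if i % 2 == 0 else 3.6 for i in range(100)]
kf = ScalarKalman(x=3.0, p=1.0, q=1e-6, r=0.01)
for z in readings:
    estimate = kf.update(z)
```

The estimate settles near 3.7 V while `p` shrinks well below the sensor variance `r`, which is the ‘estimates the uncertainty’ part of the description.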

Measurements

Estimates based on the probability distribution of the measurements over time have been shown to be more accurate than those based on a single measurement, and they allow the system to predict how the measurements are likely to change. This can be used to detect wear and tear in components for preventive maintenance, or to monitor the voltage and current of a battery so that it can be replaced before a problem occurs.
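Predicting how a measurement is likely to change needs a model with a trend. The sketch below, again an illustrative assumption rather than any vendor’s method, tracks a level and a rate of change with a two-state (constant-velocity) Kalman filter, then extrapolates the rate to estimate how many sampling intervals remain before a hypothetical warning threshold is crossed. The function name, noise values and threshold are placeholders.

```python
from typing import Optional, Sequence, Tuple


def track_and_forecast(zs: Sequence[float], dt: float, r: float, q_level: float,
                       q_rate: float, threshold: float) -> Tuple[float, float, Optional[float]]:
    """Constant-velocity Kalman filter; returns (level, rate, time until threshold)."""
    # State: [level, rate]. Start from the first reading with an unknown rate.
    level, rate = zs[0], 0.0
    p00, p01, p11 = r, 0.0, 1.0  # covariance entries (P is symmetric, so p10 == p01)
    for z in zs[1:]:
        # Predict with F = [[1, dt], [0, 1]]: P <- F P F^T + Q.
        level += dt * rate
        p00 = p00 + 2 * dt * p01 + dt * dt * p11 + q_level
        p01 = p01 + dt * p11
        p11 = p11 + q_rate
        # Update with H = [1, 0]: only the level is measured directly.
        s = p00 + r
        k0, k1 = p00 / s, p01 / s
        innovation = z - level
        level += k0 * innovation
        rate += k1 * innovation
        # P <- (I - K H) P, computed from the pre-update entries.
        p00, p01, p11 = p00 - k0 * p00, p01 - k0 * p01, p11 - k1 * p01
    # Extrapolate the trend; None if it is not heading towards the threshold.
    if rate == 0.0:
        return level, rate, None
    t = (threshold - level) / rate
    return level, rate, (t if t > 0 else None)


# A reading drifting down by 0.001 per interval, with +/-0.002 sensor noise.
zs = [4.0 - 0.001 * k + (0.002 if k % 2 == 0 else -0.002) for k in range(50)]
level, rate, remaining = track_and_forecast(zs, dt=1.0, r=4e-6, q_level=1e-8,
                                            q_rate=1e-10, threshold=3.9)
```

Here the filter recovers a rate close to −0.001 per interval and forecasts roughly 50 intervals until the threshold, which is the kind of early warning that allows maintenance to be scheduled.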

Diabatix is using machine learning in a different way to explore the design space for components for thermal management.

“We use AI instead of human engineers to design heating and cooling components,” says Hans Klass of Diabatix. “Our tool designs a component and evaluates the design in its own computational fluid dynamics environment to create a new design.

“This is no different to what happens in classical companies; however, their design loop is one or two weeks, while for us it is two to three minutes, so in 72 hours we have 1000 iterations.”

The tool optimises designs within the laws of physics. Unlike a CNN, it does not rely on pattern recognition but on a learning process. At the start, the system takes a few hours to generate a design for a customer, but then it can iterate quickly. All the design iterations run on the same supercomputer, which has 1000 processing units, so the collective learning from all the different designs for different customers is combined.
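The generate-evaluate-iterate loop can be sketched in a few lines of Python. This is a toy under loudly stated assumptions: a cheap, invented cost function (`evaluate`) stands in for the CFD simulation, the two design variables (fin count and fin thickness) are hypothetical, and a simple random-search hill climber stands in for Diabatix’s own method, which the article does not describe.

```python
import random
from typing import Tuple

Design = Tuple[int, float]  # (number of fins, fin thickness in mm), both hypothetical


def evaluate(design: Design) -> float:
    """Invented stand-in for a CFD run: lower is better.

    More fins add heat-transfer area (first term falls) but raise flow
    resistance (second term grows); thickness has a made-up sweet spot.
    """
    fins, thickness = design
    return 20.0 / fins + 0.05 * fins + (thickness - 1.5) ** 2


def mutate(design: Design, rng: random.Random) -> Design:
    """Propose a nearby design, kept inside simple bounds."""
    fins, thickness = design
    fins = min(40, max(2, fins + rng.choice((-1, 0, 1))))
    thickness = min(3.0, max(0.5, thickness + rng.gauss(0.0, 0.2)))
    return fins, thickness


def optimise(start: Design, iterations: int = 1000, seed: int = 1) -> Tuple[Design, float]:
    rng = random.Random(seed)
    best, best_cost = start, evaluate(start)
    for _ in range(iterations):
        candidate = mutate(best, rng)
        cost = evaluate(candidate)  # in a real tool: a full simulation
        if cost < best_cost:        # keep only improvements
            best, best_cost = candidate, cost
    return best, best_cost


design, cost = optimise(start=(4, 2.8))
```

The value of a fast evaluation is visible here: 1000 iterations of a cheap model take milliseconds, whereas each real CFD evaluation is what sets the minutes-per-loop pace Klass describes.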

“Every design is considered to be individual, but the system becomes better and better at solving the design challenges because of the collective knowledge,” says Klass. “Where a design now takes a day, 18 months ago it would have taken a week.”

“We have to run a new design process for every material,” he says.

(Courtesy of Intel)

“We work at the conceptual level with a limited number of iterations and compare five to 10 different materials to see which gives the best price and manufacturability, then select the best one and go into more detail.

“We support 500 materials but our customers and suppliers can only use particular ones. It becomes very interesting when balancing the thermal behaviour and weight, or the conductivity versus price.

“If you give the system full freedom it will probably design something that’s not manufacturable, so we have incorporated the constraints of different manufacturing techniques. For example, extrusion has much less freedom than milling or 3D printing, but even then there are different optimisations.”
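Klass’s description of balancing conductivity, weight and price, subject to what a manufacturing process allows, maps naturally onto a constrain-then-rank step. The Python sketch below is illustrative only: the materials, their property values and the weighting scheme are all hypothetical, and Diabatix’s actual selection logic is not described in the article.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Sequence


@dataclass(frozen=True)
class Material:
    name: str
    conductivity: float        # thermal conductivity, W/(m*K)
    density: float             # kg/m^3 (lower means a lighter part)
    cost: float                # relative cost, arbitrary units
    processes: FrozenSet[str]  # manufacturing processes the material suits


def _normalise(values: Sequence[float], higher_is_better: bool) -> List[float]:
    """Scale values to [0, 1] so that 1 is always the best candidate."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0] * len(values)
    return [(v - lo) / (hi - lo) if higher_is_better else (hi - v) / (hi - lo) for v in values]


def shortlist(materials: Sequence[Material], process: str,
              weights: Dict[str, float], top_n: int = 3) -> List[str]:
    # Constraint first: drop materials the chosen process cannot handle.
    candidates = [m for m in materials if process in m.processes]
    if not candidates:
        return []
    k = _normalise([m.conductivity for m in candidates], True)
    d = _normalise([m.density for m in candidates], False)
    c = _normalise([m.cost for m in candidates], False)
    scores = [weights["conductivity"] * ki + weights["weight"] * di + weights["cost"] * ci
              for ki, di, ci in zip(k, d, c)]
    ranked = sorted(zip(scores, candidates), key=lambda pair: pair[0], reverse=True)
    return [m.name for _, m in ranked[:top_n]]


# Hypothetical catalogue (values invented for illustration).
catalogue = [
    Material("alloy_A", 200.0, 2700.0, 3.0, frozenset({"extrusion", "milling", "3d_printing"})),
    Material("alloy_B", 390.0, 8900.0, 9.0, frozenset({"milling"})),
    Material("alloy_C", 120.0, 2600.0, 2.0, frozenset({"extrusion", "3d_printing"})),
]
weights = {"conductivity": 0.6, "weight": 0.2, "cost": 0.2}
```

With these weights, extrusion yields `["alloy_A", "alloy_C"]` while milling yields `["alloy_B", "alloy_A"]`: changing the manufacturing constraint changes the winner, which is the interaction Klass describes.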

Klass points to an electric racecar with motors in each wheel as an example. “The limiting factor in acceleration and top speed was the heat of the power electronics and the motor, so we redesigned the cooling of the motor so the car could drive for longer at top speed,” he says.

Manufacturing

AI can also be used on the manufacturing line. For example, Acerta in Canada has a cloud-based tool called LinePulse that uses neural networks to analyse in-line and end-of-line data in real time, augmenting existing processes by detecting more faults and predicting failures sooner.

It takes data from each step of the manufacturing and assembly lines and identifies variances in the data’s behaviour to provide alerts on potential defects, increasing yields and reducing scrap.
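LinePulse’s neural networks are not public, so the sketch below swaps in a classical statistical process control technique, an exponentially weighted moving average (EWMA) control chart, to show the general idea of flagging a change in one station’s data behaviour. The baseline mean and standard deviation are assumed to come from known-good production; λ = 0.2 and a limit width of 3 are conventional textbook choices, not Acerta’s.

```python
import math
from typing import List, Sequence


def ewma_alerts(values: Sequence[float], mean: float, std: float,
                lam: float = 0.2, width: float = 3.0) -> List[int]:
    """Return the indices at which the EWMA statistic leaves its control limits."""
    z = mean
    alerts = []
    for i, x in enumerate(values, start=1):
        z = lam * x + (1.0 - lam) * z  # smoothed measurement
        # Exact EWMA standard deviation after i samples (limits widen to steady state).
        sigma_z = std * math.sqrt(lam / (2.0 - lam) * (1.0 - (1.0 - lam) ** (2 * i)))
        if abs(z - mean) > width * sigma_z:
            alerts.append(i - 1)
    return alerts


# Twenty in-spec readings, then a sustained 0.6-unit shift (three baseline std devs).
readings = [10.0] * 20 + [10.6] * 10
flagged = ewma_alerts(readings, mean=10.0, std=0.2)
```

The first shifted reading does not trip the chart, but the second does, and every reading after it stays flagged; a smaller sustained drift would also accumulate in the smoothed statistic until it crossed the limit.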

LinePulse has been used to develop a predictive model for gearbox defects using historical test data from a small sample of gears for a European customer.

(Image courtesy of Nvidia)

To do this, the learning model was refined by dropping features that added little or no value to the prediction algorithm, mostly those describing the shape of the gear teeth. Reducing the total number of features also freed up degrees of freedom for estimating the weights of the summary features. Several iterations of classification models were then run with different feature sets to select the most effective.
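The article does not say how Acerta scored the value of each feature, so the following Python sketch assumes one simple filter method: rank each feature by the absolute Pearson correlation between its values and the pass/fail label, and drop those below a cut-off. Feature names, data and the 0.3 cut-off are hypothetical; a full pipeline would then train and compare classifiers on the surviving feature sets.

```python
import math
from typing import List, Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0.0 or syy == 0.0:
        return 0.0  # a constant feature carries no information
    return sxy / math.sqrt(sxx * syy)


def select_features(rows: Sequence[Sequence[float]], labels: Sequence[int],
                    names: Sequence[str], min_abs_corr: float = 0.3) -> List[str]:
    """Keep the names of features whose |correlation| with the label meets the cut-off."""
    kept = []
    for j, name in enumerate(names):
        column = [row[j] for row in rows]
        if abs(pearson(column, labels)) >= min_abs_corr:
            kept.append(name)
    return kept


# Hypothetical end-of-line features for four gearboxes; label 1 = failed in warranty.
names = ["vibration_rms", "tooth_profile_a", "tooth_profile_b"]
rows = [[1.0, 5.0, 2.0], [1.2, 5.0, 1.0], [3.0, 5.0, 2.0], [3.1, 5.0, 1.0]]
labels = [0, 0, 1, 1]
selected = select_features(rows, labels, names)
```

Both tooth-profile features are discarded here, one because it never varies and one because it is uncorrelated with failure, which leaves fewer weights for the classifier to estimate from a small sample.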

Using a dataset from a small sample of 104 gearboxes, Acerta achieved 89% accuracy in classifying whether they would survive or fail during the warranty period. The model detected 40% of the gearboxes that passed their end-of-line tests but failed during the warranty period. Using the AI capability is expected to reduce warranty expenses from gear tooth fractures by €2 million per year, per plant.

AI is also used within electric vehicles. One area immediately suited to AI is the battery management system (BMS). The BMS from Stafl Systems, for example, uses machine learning algorithms to monitor the performance of the battery pack over time (see Battery management systems focus, page 24).

These algorithms currently run in the BMS, but Eric Stafl, chief engineer of Stafl Systems, sees them moving up to the vehicle’s central controller, which could be a complex AI system such as Nvidia’s Xavier system-on-chip.

“The algorithm takes the sensed cell voltages and pack current, then runs a neural network,” says Stafl. “Our algorithms learn over time, so that if a cell group is over- or underperforming compared to nominal it will adjust the voltage.

“It’s a variant of machine learning with some parameter updates. You have a model tracking the state of the battery for where you think it is, and see what the error is with reality and adjust to accommodate. That updates the parameters over time using a Kalman filter.”
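Stafl’s description of comparing a model against reality and nudging its parameters can be illustrated with a Kalman filter that estimates a single parameter. The Python sketch below assumes a deliberately simple cell model, in which terminal voltage equals open-circuit voltage minus current times internal resistance, with the open-circuit voltage known; Stafl Systems’ actual models are not described, and every name and value here is hypothetical.

```python
from typing import Sequence


def estimate_resistance(currents: Sequence[float], voltages: Sequence[float], ocv: float,
                        r_est: float = 0.01, p: float = 1e-4, q: float = 1e-10,
                        meas_var: float = 1e-6) -> float:
    """Kalman filter tracking internal resistance R (ohms) as a slowly drifting parameter.

    Measurement model: ocv - v = amps * R + noise, so H = amps changes every sample.
    """
    for amps, volts in zip(currents, voltages):
        p += q                          # predict: R may drift slightly (random walk)
        observed = ocv - volts          # measured voltage drop
        predicted = amps * r_est        # drop expected from the current estimate
        s = amps * amps * p + meas_var  # innovation variance
        k = p * amps / s                # Kalman gain
        r_est += k * (observed - predicted)  # correct the parameter by the model error
        p *= 1.0 - k * amps             # reduced uncertainty after the update
    return r_est


# Synthetic, noise-free data from a cell with a true resistance of 20 milliohms.
currents = [10.0, 25.0, 5.0, 40.0, 15.0, 30.0]  # amps, discharge positive
voltages = [3.7 - amps * 0.02 for amps in currents]
r_hat = estimate_resistance(currents, voltages, ocv=3.7)
```

Starting from a deliberately wrong guess of 10 milliohms, the estimate converges to about 20 milliohms within the first few samples; a rising estimate over months of operation would be one sign of an ageing cell group.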

Chip maker Renesas is also using machine learning for motor controllers. It has added ‘embedded AI’ (e-AI) to its RX66T microcontrollers to monitor small motors that could be used in an electric vehicle.

The e-AI captures data on the motor’s current or rotation speed, which can be used directly to detect abnormalities in operation. This avoids the need for additional sensors, and one RX66T can monitor four motors in applications such as cooling pumps.

The e-AI software can also support preventive maintenance by showing when a motor is behaving outside its normal range. It can estimate when repairs and maintenance should be performed, and it can identify the fault locations.

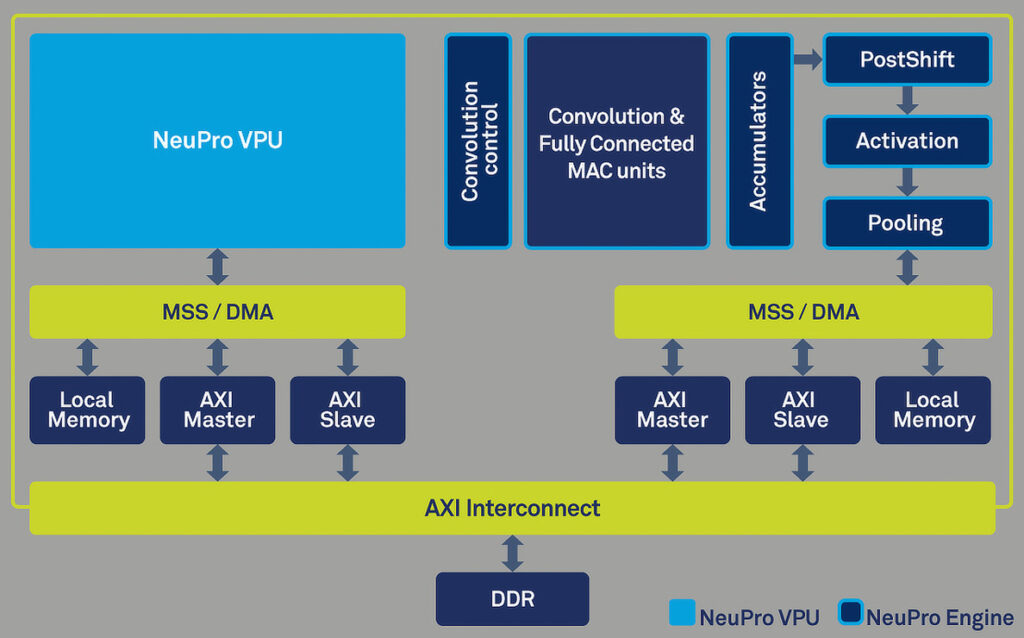

(Courtesy of CEVA)

Developers use the Renesas 24 V motor control evaluation system and an RX66T CPU card to build the system. The hardware is combined with a set of sample program files that run on the microcontroller, as well as a user interface that enables the collection and analysis of data indicating motor states.

To detect faults, it is necessary to learn the characteristics of the normal state. The user interface allows system engineers to develop AI learning and fault-detection models using different types of neural network frameworks.
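Learning the normal state and flagging departures from it can be sketched without a neural network. The Python example below deliberately swaps in a much simpler technique than Renesas’s e-AI models: it summarises each window of motor-current samples by two hypothetical features (RMS and peak-to-peak), learns their mean and spread from healthy windows, and scores new windows by their largest z-score. The feature choice, window length and an alarm level of 4 are assumptions.

```python
import math
import statistics
from typing import List, Sequence, Tuple

Model = List[Tuple[float, float]]  # per-feature (mean, standard deviation)


def features(window: Sequence[float]) -> Tuple[float, float]:
    rms = math.sqrt(sum(s * s for s in window) / len(window))
    peak_to_peak = max(window) - min(window)
    return rms, peak_to_peak


def fit_normal(windows: Sequence[Sequence[float]]) -> Model:
    """Learn the normal state from windows recorded on a healthy motor."""
    columns = zip(*(features(w) for w in windows))
    return [(statistics.fmean(col), statistics.stdev(col)) for col in columns]


def anomaly_score(window: Sequence[float], model: Model) -> float:
    """Largest number of standard deviations any feature sits from normal."""
    scores = [abs(f - mu) / sd for f, (mu, sd) in zip(features(window), model) if sd > 0]
    return max(scores, default=0.0)


def sine_window(amplitude: float, n: int = 20) -> List[float]:
    # One electrical cycle of an idealised phase current.
    return [amplitude * math.sin(2 * math.pi * k / n) for k in range(n)]


model = fit_normal([sine_window(a) for a in (0.98, 1.0, 1.02, 0.99, 1.01)])
healthy_score = anomaly_score(sine_window(1.005), model)
faulty_score = anomaly_score(sine_window(1.3), model)  # e.g. a pump drawing excess current
```

The healthy window scores well under one standard deviation while the overcurrent window scores far above the alarm level, so only the latter would be reported.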

Once the AI models have been developed, the e-AI development environment (composed of an e-AI translator, e-AI checker and e-AI importer) imports them into the RX66T.

“We now have a solution capable of detecting failures that affect the system based on detecting abnormal motor operation,” says Toru Moriya, vice-president of the Home Business Division, Industrial Solutions Business Unit, at Renesas.

“Even in cases where a fault occurs in the motor itself, it can be difficult to localise the source to determine whether there is an abnormality in the motor or the inverter circuit. This new system makes it possible to identify the fault location quickly, which has the potential to dramatically cut the maintenance burden for customers.”

AI capability is also being added to standard microcontrollers, although some cores are optimised for particular frameworks such as TensorFlow. To overcome that limitation, chip designer CEVA has added support for the Open Neural Network Exchange (ONNX) to its neural network compiler.

ONNX is an open format created by Facebook, Microsoft and AWS to enable interoperability and portability within the AI community, allowing developers to use the right combinations of tools for their project without being ‘locked in’ to any one framework or ecosystem.

The ONNX standard ensures interoperability between different deep-learning frameworks, giving developers the freedom to train their neural networks using any machine-learning framework and then deploy them using another. Support for ONNX and CNNs in CEVA’s NeuPro family of neural network processor designs enables developers to import models generated using any ONNX-compatible framework.

“We want an open, interoperable AI ecosystem, where AI application developers can take advantage of the features and the ease of use of the various deep-learning frameworks most suitable to their specific use case,” says Ilan Yona, vice-president and general manager of CEVA’s Vision Business Unit.

“By adding ONNX support to our CNN compiler technology, we provide engineers using the NeuPro family of processors with much broader capabilities to train and enrich their neural network-based applications.”

These processors have specialised engines for the matrix multiplication, activation and pooling layers common to the different frameworks, as well as a fully programmable vector processing unit for customer extensions and customisation. CEVA’s CDNN compiler allows models trained in the cloud to run on the chips in vehicles.
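The three layer types that the dedicated engines accelerate can be written out in plain Python to show what the hardware is doing. This is a minimal one-dimensional sketch for illustration, not CEVA’s implementation; real CNN layers are multi-channel and two-dimensional, but they reduce to the same multiply-accumulate, activation and pooling steps.

```python
from typing import List, Sequence


def conv1d(x: Sequence[float], kernel: Sequence[float], bias: float = 0.0) -> List[float]:
    """Valid convolution (cross-correlation, as in most CNN frameworks)."""
    k = len(kernel)
    # Each output is a chain of multiply-accumulate (MAC) operations plus a bias.
    return [sum(x[i + j] * kernel[j] for j in range(k)) + bias for i in range(len(x) - k + 1)]


def relu(x: Sequence[float]) -> List[float]:
    """Activation layer: pass positive values, zero out negative ones."""
    return [max(0.0, v) for v in x]


def max_pool(x: Sequence[float], size: int = 2) -> List[float]:
    """Pooling layer: keep the largest value in each non-overlapping window."""
    return [max(x[i:i + size]) for i in range(0, len(x) - size + 1, size)]


signal = [0.0, 1.0, 3.0, 2.0, 5.0, 4.0, 4.0]
edges = conv1d(signal, kernel=[-1.0, 1.0])  # [1.0, 2.0, -1.0, 3.0, -1.0, 0.0]
out = max_pool(relu(edges))                 # [2.0, 3.0, 0.0]
```

The inner `sum(...)` in `conv1d` is exactly the work that an array of hardware MAC units performs in parallel.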

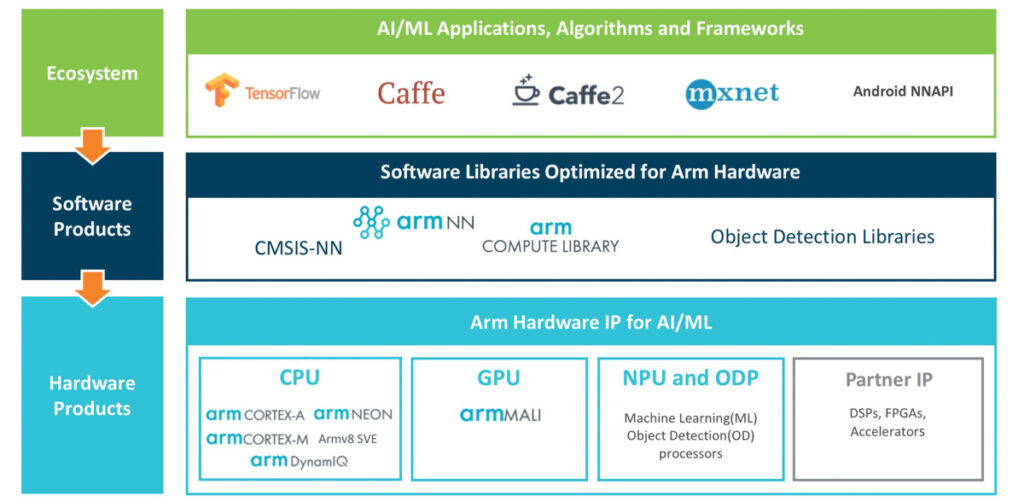

(Courtesy of ARM)

There are four versions, with different levels of parallel processing. The smallest, the NP500, has 512 multiply-accumulate (MAC) units, which compute the weighted sums at the heart of each neuron. The larger NP1000 (1024 MAC units) is aimed at driver assistance systems, while the NP2000 (2048 MAC units) and NP4000 (4096 MAC units) are used for electric vehicle platforms.
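A back-of-the-envelope calculation shows why the MAC count matters. The Python sketch below counts the multiply-accumulates in one convolution layer and divides by the number of MAC units, under the idealised assumption that every unit completes one MAC per clock cycle at 100% utilisation. Real throughput also depends on memory bandwidth, data reuse and compiler efficiency, and the layer dimensions are hypothetical.

```python
def conv2d_macs(out_h: int, out_w: int, in_ch: int, out_ch: int, k: int) -> int:
    """MACs for a standard 2-D convolution: one k*k*in_ch dot product per output value."""
    return out_h * out_w * out_ch * in_ch * k * k


def ideal_cycles(macs: int, mac_units: int) -> int:
    """Lower bound on clock cycles if every MAC unit is busy every cycle (integer ceiling)."""
    return -(-macs // mac_units)


# Hypothetical layer: 56x56 output, 64 input and 64 output channels, 3x3 kernel.
macs = conv2d_macs(56, 56, 64, 64, 3)  # 115,605,504 MACs
cycles = {units: ideal_cycles(macs, units) for units in (512, 1024, 2048, 4096)}
# {512: 225792, 1024: 112896, 2048: 56448, 4096: 28224}
```

In this ideal case, doubling the MAC array halves the cycle count, which is why the larger versions suit heavier workloads.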

CEVA supplies a full development platform so that chip designers can develop their own controllers that meet functional safety requirements such as ASIL-D under ISO 26262.

ARM is another supplier of IP to chip developers, and it has added AI capabilities to its automotive-class processor cores. The Cortex-A76AE targets functional safety applications such as ADAS and autonomous vehicles. Its split-lock capability allows cores to run either independently or in lock-step with another core, executing the same instructions in step so that their outputs can be compared. This allows the combination of processors to meet ASIL-D under ISO 26262.
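The lock-step principle itself is simple to illustrate. The Python sketch below is a software analogy only, not how the Cortex-A76AE is implemented in silicon: two copies of the same computation advance in step on identical inputs, a comparator checks their state after every step, and an injected single-bit upset in one copy is caught at the step where it occurs. The step function and the fault-injection parameter are hypothetical.

```python
from typing import Callable, Iterable, Optional


def run_lockstep(step: Callable[[int, int], int], inputs: Iterable[int],
                 inject_fault_at: Optional[int] = None) -> Optional[int]:
    """Return the step index at which the two copies diverge, or None if they never do."""
    primary = shadow = 0
    for n, x in enumerate(inputs):
        primary = step(primary, x)
        shadow = step(shadow, x)
        if n == inject_fault_at:
            shadow ^= 1  # simulate a single-bit upset in the redundant core
        if primary != shadow:
            return n     # comparator trips: a real system would move to a safe state
    return None


def checksum_step(state: int, x: int) -> int:
    # Any deterministic computation works; this one is a 16-bit rolling checksum.
    return (state * 31 + x) & 0xFFFF
```

For example, `run_lockstep(checksum_step, range(10))` returns `None`, while passing `inject_fault_at=4` returns `4`, the exact step at which the fault appeared.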

Alongside the A76AE, ARM’s Project Trillium aims to develop a processor core optimised for machine learning that would sit alongside its more conventional processors.

ARM has also developed open source neural network software to support Linaro’s Machine Intelligence Initiative, which is used by many e-mobility developers. The software can take models from frameworks such as Caffe or TensorFlow and run them on mainstream ARM processors in different parts of an electric platform.

Summary

Designers are using AI technologies to significantly improve the performance and reliability of e-mobility systems. Neural networks are the mainstream approach, used largely for vision processing and running on standard high-performance processors and graphics processors.

New designs are looking to add neural network acceleration to less powerful processors that can be used in more places in a platform’s design. New types of neuromorphic processors are looking to boost the performance of neural network frameworks while reducing power consumption.

There are other approaches, though. Kalman algorithms, for example, can be developed and optimised to run on more basic processors, again providing predictive analysis of the performance of motors and other electrical subsystems.
